Deep Agents at Scale: The Three Problems You Can’t Ignore

Why context size alone doesn’t solve production agents — and how real systems handle unbounded data, parallel reasoning, and reliable output.

Most discussions of production agents focus heavily on context window size. That framing is incomplete.

Larger context windows are unquestionably valuable—a model capable of processing millions of tokens meaningfully expands what can be handled in a single inference. However, for long‑running, production‑grade agents, the volume of relevant data inevitably grows beyond any fixed window. No matter how large the context becomes, there will always be additional data to collect, inspect, correlate, and transform.

The core challenge, therefore, is not eliminating context limits, but designing systems whose correctness and performance do not depend on fitting all relevant data into the model at once. This shifts the problem from prompt construction to system architecture: how data is collected, how it is processed, and how results are produced in a way users can trust.

A proof‑of‑concept agent can survive on a narrow prompt and a handful of examples. A production agent must operate over arbitrary, noisy, real‑world data—often at substantial scale—while remaining fast, predictable, and correct.

Agents that meet these requirements are often referred to as deep agents. Systems such as Manus, Base44, Figma Make, and Claude Code fall into this category.

The challenge in building deep agents is not a lack of techniques—RAG, vector search, prompt compression, frameworks, and workflows are well covered in the literature—but the absence of guidance on composing these pieces into long-running, resilient systems that operate reliably over unbounded data.

While architectural patterns are beginning to converge—for example, the LangChain deep-agent harness—meeting production requirements around latency, completeness, and cost typically demands domain-specific design choices.

Across different implementations, including our own at Sweep.io, deep agents consistently encounter three bottlenecks:

Collecting large volumes of data

Processing large volumes of data

Producing large volumes of output

The remainder of this article presents a concrete approach to addressing each of these challenges.

Context: The implementation discussed here is drawn from our work at Sweep.io, where deep agents are used to analyze and reason over enterprise metadata. The system uses LangChain and LangGraph, but the ideas are framework‑agnostic. Python is used due to the breadth and maturity of its data‑processing and machine‑learning ecosystem.

Problem 1: Collecting and Storing Large Volumes of Data

At Sweep.io, production deep agents must reason over enterprise metadata: schemas, object relationships, dependencies, and historical change signals that describe how complex systems are wired together. As a result, a single agent typically exposes many tools, often dozens. Tool orchestration is a substantial problem in its own right, but the consequence that matters here is data volume: those tools return database records, filesystem artifacts, API responses, and search results.

Passing these payloads directly to an LLM—for example, via messages in LangGraph—results in rapid context exhaustion. Common mitigations include persisting data to disk or aggressively compacting tool outputs.

We adopt a different strategy.

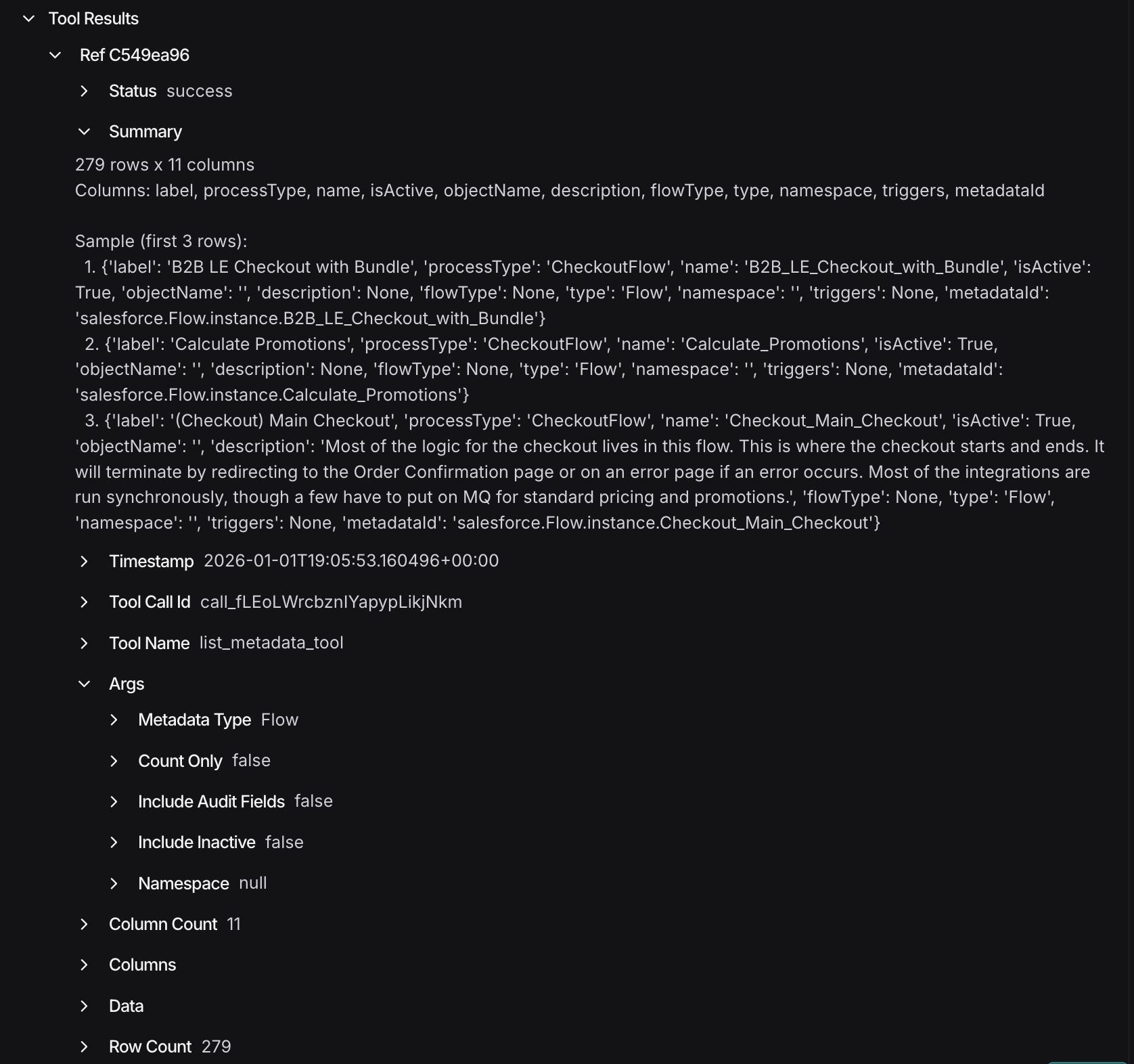

Most tool outputs are inherently structured, and full materialization inside the LLM context is rarely necessary for effective reasoning. Instead of storing raw payloads or external files, tool outputs are normalized into dataframes (for example, using pandas or polars) and stored directly in the agent state on a per‑invocation basis.
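A minimal sketch of the normalization step, assuming a tool returns a list of JSON-like records and that agent state can be treated as a plain dict keyed by tool-call ID. The helpers `normalize_tool_output` and `store_tool_result`, the `frames` state key, and the sample records are hypothetical illustrations, not LangGraph APIs or Sweep.io's code.

```python
import pandas as pd


def normalize_tool_output(records: list[dict]) -> pd.DataFrame:
    """Flatten JSON-like records into a dataframe; nested keys become dotted columns."""
    return pd.json_normalize(records)


def store_tool_result(state: dict, tool_call_id: str, records: list[dict]) -> dict:
    """Return a new state with this invocation's dataframe stored under its tool-call ID."""
    frames = dict(state.get("frames", {}))  # copy so the previous state is not mutated
    frames[tool_call_id] = normalize_tool_output(records)
    return {**state, "frames": frames}


# Hypothetical tool output describing fields on a CRM object.
records = [
    {"object": "Account", "field": "Industry", "type": "picklist", "meta": {"required": False}},
    {"object": "Account", "field": "Name", "type": "string", "meta": {"required": True}},
]
state = store_tool_result({}, "call_1", records)
print(state["frames"]["call_1"].columns.tolist())
```

Keeping one dataframe per invocation means later steps can address a result by its tool-call ID without re-running the tool.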

Each dataframe is accompanied by lightweight metadata:

Column names

Total row count

A concise summary including a small row sample
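The three pieces of metadata above can be computed cheaply from any stored dataframe. This is a sketch under the assumption that a head sample of a few rows is representative enough; `frame_metadata` and the `sample_rows` default are hypothetical choices.

```python
import pandas as pd


def frame_metadata(df: pd.DataFrame, sample_rows: int = 3) -> dict:
    """Build the lightweight metadata kept alongside each stored dataframe."""
    return {
        "columns": [str(c) for c in df.columns],
        "row_count": int(len(df)),
        # A small head sample; orient="records" keeps it JSON-friendly.
        "sample": df.head(sample_rows).to_dict(orient="records"),
    }


df = pd.DataFrame({
    "object": ["Account", "Contact", "Opportunity", "Lead"],
    "field_count": [120, 85, 140, 60],
})
meta = frame_metadata(df, sample_rows=2)
print(meta["row_count"], meta["columns"])
```

A random sample (`df.sample(n)`) is an alternative when the first rows are not typical, at the cost of a non-deterministic prompt.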

When contextual information is required, the LLM is provided only with this summary representation.
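As an illustration, the summary could be rendered as a few lines of plain text for the prompt. The layout here (a header with the row count, column names with dtypes, and a short sample via `DataFrame.to_string`) is an assumed format, and `render_summary` is a hypothetical helper.

```python
import pandas as pd


def render_summary(name: str, df: pd.DataFrame, sample_rows: int = 3) -> str:
    """Render a compact, prompt-friendly summary instead of the full dataframe."""
    columns = ", ".join(f"{col} ({dtype})" for col, dtype in df.dtypes.items())
    sample = df.head(sample_rows).to_string(index=False)
    return (
        f"Dataframe '{name}': {len(df)} rows\n"
        f"Columns: {columns}\n"
        f"Sample ({min(sample_rows, len(df))} rows):\n{sample}"
    )


# 10,000 synthetic rows; only the header lines and three sample rows reach the model.
df = pd.DataFrame({
    "object": [f"Object_{i}" for i in range(10_000)],
    "dependency_count": [i % 17 for i in range(10_000)],
})
summary = render_summary("call_1", df)
print(summary)
```

The rendered text stays the same size whether the dataframe holds ten rows or ten million, which is the property the design relies on.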

In practice, this is sufficient for the model to infer the structure and semantics of the data without incurring the token cost of full materialization.

This design avoids filesystem complexity, substantially reduces token consumption, and keeps data immediately accessible for downstream computation.

Problem 2: Processing Large Volumes of Data

Once data has been collected and summarized, it must be processed. In Sweep.io’s case, users often ask questions whose answers are buried deep in enterprise metadata—across schemas, relationships, and historical changes—and require drawing conclusions from that structure. Answering such questions cannot be done in a single LLM invocation, regardless of context window size.

Instead, computation is decomposed across sub‑agents.

Given a dataset summary, a model is tasked with producing:

Instructions for each sub‑agent

A JSON schema describing the expected output
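A sketch of what the planner's output contract might look like, assuming the planner returns JSON with one instruction string per sub-agent and a single shared JSON schema. The `SubAgentPlan` dataclass, its field names, and the sample response are hypothetical; in a real system the response would come from a structured-output LLM call.

```python
import json
from dataclasses import dataclass


@dataclass
class SubAgentPlan:
    instructions: list[str]  # one system prompt per sub-agent
    output_schema: dict      # JSON schema every sub-agent output must satisfy


def parse_plan(raw: str) -> SubAgentPlan:
    """Parse and minimally check a planner response before fanning out."""
    data = json.loads(raw)
    schema = data["output_schema"]
    if schema.get("type") != "object" or "properties" not in schema:
        raise ValueError("output_schema must describe a JSON object")
    if not data["instructions"]:
        raise ValueError("planner returned no sub-agent instructions")
    return SubAgentPlan(instructions=list(data["instructions"]), output_schema=schema)


# Hypothetical planner response for a two-way split.
raw_response = json.dumps({
    "instructions": [
        "Identify fields in your slice that no automation references.",
        "Identify fields in your slice that no automation references.",
    ],
    "output_schema": {
        "type": "object",
        "properties": {
            "unused_fields": {"type": "array", "items": {"type": "string"}},
            "notes": {"type": "string"},
        },
        "required": ["unused_fields"],
    },
})
plan = parse_plan(raw_response)
print(len(plan.instructions), plan.output_schema["required"])
```

The checks are intentionally shallow; a full validator such as the `jsonschema` package could replace them.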

Each sub‑agent receives:

Its slice of the dataframe

Instructions that serve as a system prompt

A structured output contract defined by the JSON schema
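One simple way to assemble these inputs is to pair each instruction with a contiguous block of rows. Contiguous row slicing is an assumption made for illustration; a real split might group rows by object or by some other key. `build_subagent_tasks` is a hypothetical helper.

```python
import pandas as pd


def build_subagent_tasks(df: pd.DataFrame, instructions: list[str], output_schema: dict) -> list[dict]:
    """Pair each instruction with a contiguous slice of the dataframe."""
    if not instructions:
        raise ValueError("need at least one sub-agent instruction")
    n = len(instructions)
    chunk = -(-len(df) // n)  # ceiling division so every row is assigned to some slice
    return [
        {
            "system_prompt": instructions[i],
            "data": df.iloc[i * chunk:(i + 1) * chunk],
            "output_schema": output_schema,
        }
        for i in range(n)
    ]


df = pd.DataFrame({"field": [f"f{i}" for i in range(10)]})
tasks = build_subagent_tasks(df, ["Find unused fields."] * 3, {"type": "object"})
print([len(t["data"]) for t in tasks])
```

With more sub-agents than rows, trailing slices come back empty, so a caller might drop those tasks before execution.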

The sub‑agents themselves are deliberately minimal: ReAct‑style agents with structured outputs and no shared global context. They execute in parallel, yielding substantial reductions in end‑to‑end latency.
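The fan-out itself can be sketched with `asyncio.gather`. Here `run_subagent` is a hypothetical stand-in for a ReAct-style agent with structured output; it returns a canned result so the control flow runs without an LLM. Checking only the schema's `required` keys is a deliberately small substitute for full JSON Schema validation.

```python
import asyncio


async def run_subagent(system_prompt: str, rows: list[dict], output_schema: dict) -> dict:
    """Hypothetical stand-in for an LLM sub-agent; it sees only its own prompt and rows."""
    await asyncio.sleep(0.01)  # simulated model latency
    return {"unused_fields": [r["field"] for r in rows if r["references"] == 0]}


def check_required(result: dict, output_schema: dict) -> dict:
    """Reject outputs that are missing keys the schema marks as required."""
    missing = [k for k in output_schema.get("required", []) if k not in result]
    if missing:
        raise ValueError(f"sub-agent output missing keys: {missing}")
    return result


async def fan_out(tasks: list[dict]) -> list[dict]:
    """Run all sub-agents concurrently; gather preserves input order."""
    results = await asyncio.gather(
        *(run_subagent(t["system_prompt"], t["rows"], t["output_schema"]) for t in tasks)
    )
    return [check_required(r, t["output_schema"]) for r, t in zip(results, tasks)]


schema = {"type": "object", "required": ["unused_fields"]}
tasks = [
    {"system_prompt": "Find unused fields.",
     "rows": [{"field": "A", "references": 0}, {"field": "B", "references": 3}],
     "output_schema": schema},
    {"system_prompt": "Find unused fields.",
     "rows": [{"field": "C", "references": 0}],
     "output_schema": schema},
]
outputs = asyncio.run(fan_out(tasks))
print(outputs)
```

Rows are passed as lists of dicts here; a dataframe slice could be converted with `df.to_dict(orient="records")`.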

Parallel execution introduces operational constraints, particularly around rate limits and cost. These are addressed using standard techniques such as model load balancing (for example, via LiteLLM) or controlled, staggered execution.
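Controlled, staggered execution can be approximated with a semaphore that caps in-flight calls plus a small per-task start delay. This is a sketch with a simulated model call; `call_model`, the `stats` counters, and the default limits are hypothetical, and load balancing through LiteLLM is not shown.

```python
import asyncio

stats = {"in_flight": 0, "peak": 0}  # instrumentation for the demo only


async def call_model(i: int) -> int:
    """Hypothetical stand-in for one rate-limited LLM call."""
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.05)  # simulated request latency
    stats["in_flight"] -= 1
    return i


async def staggered_fan_out(n: int, max_concurrency: int = 4, stagger_s: float = 0.02) -> list[int]:
    """Cap concurrent calls with a semaphore and space out start times."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(i: int) -> int:
        await asyncio.sleep(i * stagger_s)  # task i starts roughly i * stagger_s seconds in
        async with semaphore:               # at most max_concurrency calls in flight
            return await call_model(i)

    return await asyncio.gather(*(worker(i) for i in range(n)))


results = asyncio.run(staggered_fan_out(8, max_concurrency=2))
print(results, stats["peak"])
```

The semaphore bounds concurrency against provider rate limits, while the stagger smooths bursts at the start of a large fan-out.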

Multiple orchestration strategies are possible. In our implementation, sub‑agents are invoked via a single tool call that triggers multiple subgraphs. This integrates naturally with the dataframe‑centric state model: aggregation reduces to collecting a list of JSON outputs and persisting them as a standard tool result.
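The aggregation step can be sketched as follows, assuming the fan-out has already produced a list of JSON outputs (the fan-out is omitted here). The outputs are stored as one dataframe under the triggering tool call's ID, and only a short message goes back to the model. `aggregate_subagent_outputs` and the message format are hypothetical.

```python
import json

import pandas as pd


def aggregate_subagent_outputs(state: dict, tool_call_id: str, outputs: list[dict]) -> tuple[dict, str]:
    """Persist sub-agent JSON outputs as one tool result and return a short message for the LLM."""
    df = pd.json_normalize(outputs)
    df.insert(0, "subagent", range(len(outputs)))  # remember which sub-agent produced each row
    frames = {**state.get("frames", {}), tool_call_id: df}
    message = json.dumps({"tool_call_id": tool_call_id, "rows": len(df), "columns": list(df.columns)})
    return {**state, "frames": frames}, message


outputs = [{"unused_fields": ["A"]}, {"unused_fields": ["C", "D"]}]
state, message = aggregate_subagent_outputs({}, "call_2", outputs)
print(message)
```

Because the aggregated result is just another dataframe in state, it can be summarized, sliced, or passed to code generation like any other tool result.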

Problem 3: Producing Large Volumes of Output

LLMs are inherently unreliable when asked to emit large volumes of homogeneous data. When generating large tables or lists, models frequently return partial results and state that the remaining output has been omitted.

In production systems, this behavior is unacceptable.

A more reliable approach is to stop asking the LLM to generate the output directly and instead ask it to generate code.

As before, the full dataset cannot be passed back into the model. The dataframe‑plus‑summary representation again becomes critical. The model is provided with:

Column definitions

A small sample of the data

A description of the required transformation and output structure
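A sketch of how these three inputs might be combined into a code-generation prompt. The prompt wording and the `input.csv` / `output.csv` file contract are assumptions for illustration; `build_codegen_prompt` is a hypothetical helper.

```python
import pandas as pd


def build_codegen_prompt(df: pd.DataFrame, task: str, sample_rows: int = 3) -> str:
    """Describe the data (not the data itself) and ask for a script that does the work."""
    columns = "\n".join(f"- {col}: {dtype}" for col, dtype in df.dtypes.items())
    sample = df.head(sample_rows).to_csv(index=False)
    return (
        "Write a Python script that reads the full dataset from input.csv, "
        "performs the transformation below, and writes the result to output.csv.\n\n"
        f"Columns:\n{columns}\n\n"
        f"Sample rows:\n{sample}\n"
        f"Transformation and output structure:\n{task}\n"
    )


df = pd.DataFrame({
    "object": [f"obj_{i}" for i in range(1_000)],
    "dependency_count": [i % 7 for i in range(1_000)],
})
prompt = build_codegen_prompt(df, "Total dependency_count per object, one row per object.")
print(prompt)
```

The prompt size depends on the column count and sample size, not on the number of rows the script will eventually process.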

The model generates a Python script that performs the transformation. This script is executed in a sandboxed environment against the full dataset, producing a deterministic and complete result without relying on the LLM to stream large payloads correctly.
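A minimal sketch of the execution step, assuming the generated script follows an `input.csv` / `output.csv` contract. `GENERATED_SCRIPT` is a hard-coded stand-in for model output and `run_generated_script` is hypothetical. A separate process with a temporary working directory and a timeout provides only file isolation and a time limit; it is not a security boundary, so untrusted code would still need a real sandbox such as a locked-down container.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

import pandas as pd

# Stand-in for model-generated code; in practice this string comes from the LLM.
GENERATED_SCRIPT = """
import pandas as pd
df = pd.read_csv("input.csv")
out = df.groupby("object", as_index=False)["dependency_count"].sum()
out.to_csv("output.csv", index=False)
"""


def run_generated_script(script: str, df: pd.DataFrame, timeout_s: float = 60.0) -> pd.DataFrame:
    """Run generated code in a child process and temp directory against the full dataset."""
    with tempfile.TemporaryDirectory() as workdir:
        work = Path(workdir)
        df.to_csv(work / "input.csv", index=False)
        (work / "script.py").write_text(script)
        subprocess.run(
            [sys.executable, "script.py"],
            cwd=str(work),
            capture_output=True,
            text=True,
            timeout=timeout_s,  # raises TimeoutExpired if the script hangs
            check=True,         # raises CalledProcessError if the script fails
        )
        return pd.read_csv(work / "output.csv")


df = pd.DataFrame({
    "object": ["Account", "Contact", "Account", "Lead"],
    "dependency_count": [1, 2, 3, 4],
})
result = run_generated_script(GENERATED_SCRIPT, df)
print(result)
```

Every row in the output is produced by ordinary pandas code, so completeness no longer depends on how much text the model is willing to emit.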

Conclusion: Let LLMs Reason, Not Execute at Scale

At scale, a consistent pattern emerges: LLMs excel at reasoning over summaries and deciding what should happen, but they are unreliable and inefficient when asked to directly manipulate or emit large volumes of data.

The architecture described here follows naturally from that observation:

Large data is kept as structured dataframes in agent state

Work is decomposed into parallel sub‑agents with strict, structured outputs

LLMs are used to generate code for large outputs, rather than generating the outputs themselves

This positions the LLM as a planner and coordinator, while deterministic systems handle bulk data processing. In practice, this enables deep agents that remain predictable and performant at scale—in our case, processing requests touching approximately eight million tokens end‑to‑end in under six minutes without sacrificing correctness.